Invisible Boxes

If you haven’t failed, then I probably don’t value your opinion all that much.

Why?

Well, to illustrate….

The First Game

You’re in a small, dimly lit room, seated at a cafeteria-style table. Across from you is a youngish guy in a T-shirt. On the table are a stack of index cards and a cup of markers. To the side are two people in business casual.

One steps forward. “You’re here to deliver features to the user,” she says, “who is sitting across from you. A feature is an index card with numbers written on it.”

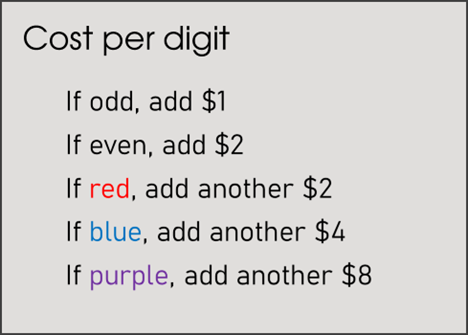

She hands you a sheet of paper. It reads:

Each card must have:

1. Three digits

2. An even number

3. A red number and a purple number

She takes a timer from her pocket and sets it for 30 seconds, telling you that this is the length of each sprint.

The timer beeps when she starts it.

Somewhat confused, you grab three markers and uncap them, slide over an index card, and write “2, 4, 6” in black, red, and purple. The user takes the card and slides it to his left, your right. He grabs a card of his own, writes “Yes, this works,” and places this label next to the feature.

He then looks at you expectantly.

“Are you ready for another 30-second sprint?” the moderator asks.

“Umm, can I talk to the user?” you ask.

“Of course.”

You look at the user and motion to the feature. “So…what are you looking for? Why do you like this feature?” you ask.

He nods. “We really need more purple numbers,” the user says.

The moderator starts the timer. You cap the black marker and write “2” in red, then “4, 6, 8, 10, 12” in purple. The user slides this to the same “Yes, this works” pile. In the following sprint, you produce three such cards, all of which go into the same pile.

“Do…you want more purple?” you ask.

“I love purple numbers,” he replies.

You next create a feature that has a red “2” and then runs “4” through “20” in purple. The user accepts it.

You sit back, feeling self-satisfied without really knowing why.

You then become curious: “Wait a minute,” you say to the moderator, “who is the other person in the room?”

“Oh, that’s Fred from Finance,” she replies.

“Oh,” you think to yourself. “Um, can I talk to Fred?”

“Of course.”

You look over at Fred, who still hasn’t introduced himself.

“Is…there anything you can tell me?” you ask Fred.

Without saying anything he walks toward you and places another card on the table. You scan the card. Your eyebrows go up.

“So…do you really need all these purple numbers?” you ask the user.

“Oh no, but they’re really nice to have,” he says.

You grab another index card and do some quick math. The last feature tallies to $94.

“But the requirements call for at least one purple and one red number,” you point out.

“Sure, but I can actually complete my task without them,” he says.

You flinch. “So…the requirements are wrong?” you ask.

“I guess,” the user says, as though this doesn’t matter much.

“Colored digits cost extra…but aren’t really needed?”

“Yeah….”

You think about this for a second. “What other requirements are wrong?” you ask.

The user doesn’t hesitate: “I guess all of them.” He drums his fingers on the table.

“Why give me incorrect requirements?” you ask the moderator.

She shrugs. “You really just assume assumptions are correct because they’re called requirements?”

“Well, no,” you say after a pause.

“To quote General Patton,” she says, “does scope creep or does understanding grow?”

You want to jump off a cliff.

***

OK, so what the hell’s going on here? What’s the point of this idiocy?

Well, to illustrate….

The Second Game

Now you really want to jump off a cliff, don’t you?

There is, however, no cliff in sight.

You’re back in the dimly lit room, seated at the same cafeteria-style table. This time the moderator is seated across from you.

No one else is there.

“OK,” she says, “I am thinking of a secret category. Your task is to guess what the category is.”

You sigh.

“We’ll play this game in 10-second rounds,” she says, producing the same timer. “At the beginning of each round, I will name a person who fits the secret category. That’s your freebie. For the next 10 seconds you can throw out other names and I will tell you if they also fit the category. Are you ready?”

“Sure,” you say, rolling your eyes.

“Your freebie is Thomas Jefferson.” She starts a 10-second timer.

“Umm, George Washington,” you say.

“That fits.”

“OK, uh, Abraham Lincoln.”

“That fits.”

The timer goes off.

“Would you care to guess what the category is?” she asks.

“US presidents,” you say without hesitation.

“Good. As a percentage, how confident are you in this guess?”

“Ninety-five percent,” you say.

“Very good,” she says. “That is not correct. Your next freebie is Martin Luther King Jr.”

You guess Gandhi and Nelson Mandela. They both fit the category.

Asked to guess the category, you say, “National heroes?” You say you’re 70% sure.

You’re told you’re wrong.

The next freebie is Groucho Marx.

***

OK, so what’s the point of all this?

Well, to illustrate….

This time you flatly refuse.

You’re finally learning.

Debrief: The Second Game

I originally learned the secret category game at an Agile training session. I have since used it in countless classes and, well, it always plays out the same way. People guess a category based on the first freebie, then they throw out as many names as they can that they think will fit that category. Afterwards, they are close to 100% sure their guess is correct.

When they are told they are wrong, they stick with the same strategy. They do this repeatedly, even as their reported confidence level declines over time. It often takes five or six rounds for people to guess the correct category, which is “Famous Dead People”.

This game nicely illustrates a variety of cognitive biases, allowing participants to experience them live and in real time. The first is often called “confirmation bias”, though it is really more of a positive test bias. When we form a belief, when we find an explanation that seems to “connect the dots”, we tend to latch onto it and defend it even against later, better explanations.

We all consume information through the lens of what we already happen to think. We seek “coherence” and guard our current paradigms…but notice this strategy does not actually test our beliefs. Seeking additional confirmatory evidence does not tell us whether our guesses are false. As Karl Popper stressed, if you don’t expose your thinking to falsification, then you are not being empirical.

Another bias here is that we’re typically most confident at the beginning, when we have made the fewest guesses! This reflects what Tversky and Kahneman called the “belief in the law of small numbers”. As alluded to above, we also like to pile up data that are highly correlated with one another. This makes us feel more confident even though it doesn’t improve our accuracy. In information-theoretic terms, it’s redundant. It’s like Wittgenstein’s joke about the man who bought 100 copies of the newspaper so he could be sure the front-page story was true.

To figure out the secret category fast you would need to diverge and go wide. If the moderator says “Thomas Jefferson” and your guess is “US presidents”, then instead of rattling off a list of US presidents, which is a waste of time, you should say something like “Ben Affleck”!

Instead of endlessly confirming the presumed rule, you should test it right out of the gate.
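To make “diverge and go wide” concrete, here is a minimal Python sketch that treats each guess as a test against a handful of rival categories. The rival list and the per-name labels are hand-picked assumptions for illustration, not part of the original game; only the secret category comes from the debrief above.

```python
# Toy, hand-labeled data (an assumption for illustration): which rival
# categories each name belongs to.
LABELS = {
    "George Washington": {"US presidents", "Famous Americans", "National heroes", "Famous dead people"},
    "Abraham Lincoln": {"US presidents", "Famous Americans", "National heroes", "Famous dead people"},
    "Ben Affleck": {"Famous Americans"},
    "Gandhi": {"National heroes", "Famous dead people"},
    "Groucho Marx": {"Famous Americans", "Famous dead people"},
}
RIVALS = ["US presidents", "Famous Americans", "National heroes", "Famous dead people"]
SECRET = "Famous dead people"  # the moderator's category, per the debrief


def moderator_says_fits(name: str) -> bool:
    """The moderator answers using the secret category."""
    return SECRET in LABELS[name]


def surviving(rivals: list[str], name: str) -> list[str]:
    """Keep only the rival categories that predicted the moderator's answer."""
    answer = moderator_says_fits(name)
    return [r for r in rivals if (r in LABELS[name]) == answer]


def play(guesses: list[str]) -> list[str]:
    """Run a sequence of guesses and report how many rivals each one rules out."""
    remaining = list(RIVALS)
    for name in guesses:
        before = len(remaining)
        remaining = surviving(remaining, name)
        print(f"{name:<18} eliminated {before - len(remaining)} rival(s)")
    return remaining


play(["George Washington", "Abraham Lincoln"])       # confirming guesses
play(["Ben Affleck", "Gandhi", "Groucho Marx"])       # diverging guesses
```

Rattling off more presidents eliminates nothing, because every rival predicts “fits.” Each diverging guess, by contrast, rules out at least one rival, whatever the moderator answers.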

Debrief: The First Game

The first game is something I created with Agile coaches Matt D’Elia and Mark McPherson (now almost a decade ago!). I based the idea on the work of cognitive scientist P. C. Wason.

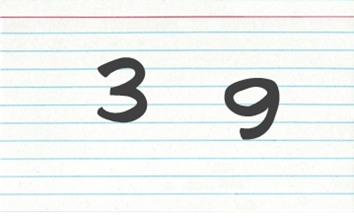

Here, all the user really needs to complete their task is numbers in ascending order: any number of numbers, as long as they ascend. Thus, the card below would have worked, and would have “cost” only $2, as opposed to the last feature in the example, which was 47 times as expensive!

The purpose here is to show how the cognitive biases from the second game can lead to solving the wrong problems and overengineering. The “requirements” were deliberately wrong, to reinforce the point that all you really have upfront are assumptions, even if you label them otherwise.
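The gap between the written requirements and the user’s real need can be sketched in a few lines of Python. The card encoding and the particular reading of the three requirements (at least three numbers, at least one even number, at least one red and one purple number) are assumptions made for illustration; the “ascending order” rule is the one stated in the debrief.

```python
# A card is modeled as a list of (number, color) pairs. This encoding is an
# illustrative assumption; the game itself just uses index cards and markers.
Card = list[tuple[int, str]]


def user_accepts(card: Card) -> bool:
    """The user's actual need, per the debrief: numbers in ascending order."""
    values = [value for value, _ in card]
    return all(a < b for a, b in zip(values, values[1:]))


def meets_stated_requirements(card: Card) -> bool:
    """A hypothetical reading of the written 'requirements': at least three
    numbers, at least one even number, and at least one red and one purple number."""
    colors = {color for _, color in card}
    return (
        len(card) >= 3
        and any(value % 2 == 0 for value, _ in card)
        and {"red", "purple"} <= colors
    )


first_card = [(2, "black"), (4, "red"), (6, "purple")]  # the first feature in the story
probe = [(1, "black"), (7, "black")]  # deliberately breaks every stated requirement
descending = [(6, "black"), (4, "black")]  # breaks the user's real rule

for name, card in [("first card", first_card), ("probe", probe), ("descending", descending)]:
    print(f"{name:<11} spec={meets_stated_requirements(card)} accepted={user_accepts(card)}")
```

Cards built to spec keep getting accepted, which feels like confirmation, but only the probe that breaks the spec shows that the colors and the three-number minimum were never needed.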

The user kept asking for purple numbers to reinforce Rule No. 1 of interviewing users: Never ask users what they would like or what they will use. Since they cannot predict their own future behavior, whatever they tell you will not help you surface the underlying issues in the larger system at play.

Both games draw from classic research in the field of Judgment and Decision Making, especially the work of P. C. Wason and P. N. Johnson-Laird. A famous example of such a game is Wason’s “card-selection task” from the 1960s.

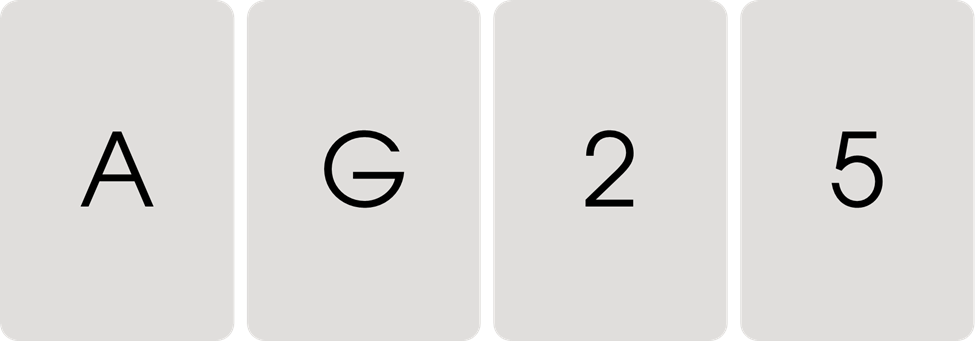

You see four cards in front of you. Each has a letter on one side and a number on the other. You are told “If there is a G on one side, then there is a 2 on the other.” Now, which two cards do you need to turn over to test this statement?

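You should know by now that the answer is not the G and the 2. So, what is it? Well, it’s a conditional statement, and you want to try to debunk it, so when is a conditional statement false? A conditional, or if-then statement, is false only when the antecedent (the “if” part) is TRUE and the consequent (the “then” part) is FALSE. So you need the cards that could hide that combination: the G, which might have something other than a 2 on the back, and the 5, which might have a G on the back. The 2 can’t falsify the rule, since the rule never says that only a G may sit behind a 2. Therefore, though the most common choice is the G and the 2, the correct answer is the G and the 5.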

Research has shown that people find this task far easier when it is reframed in a socially meaningful way, so let’s do that. Here, each card has a beverage on one side and an age on the other. You are told “If there is an alcoholic beverage on one side, then the age on the other side is 21 or above.” Now, which two cards would you need to turn over to test this?

Right, hopefully this one is more obvious. (You need to turn over Vodka and 17.)
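The falsification logic above is mechanical enough to check by brute force. The Python sketch below, an illustration rather than anything from Wason’s own work, tries every possible hidden face for each card and flags a card only if some hidden value would make the rule false. The text does not name the fourth letter card or the second beverage and age cards, so “K”, “Cola”, “Beer”, and 25 are hypothetical stand-ins.

```python
from string import ascii_uppercase


def must_turn(face, shows_side_a, side_a_values, side_b_values, rule_holds):
    """Return True if some possible hidden face would make the rule false.

    rule_holds(a, b) checks one card with value a on side A and b on side B.
    shows_side_a says whether the visible face is the side-A value.
    """
    if shows_side_a:
        return any(not rule_holds(face, b) for b in side_b_values)
    return any(not rule_holds(a, face) for a in side_a_values)


# Letter/number version: "If there is a G on one side, then there is a 2 on the other."
def g_rule(letter, number):
    # An if-then is false only when the "if" part is true and the "then" part is false.
    return not (letter == "G" and number != 2)


# "K" stands in for the fourth card, whose letter is not given in the text.
letter_cards = [("G", True), ("K", True), (2, False), (5, False)]
letter_answer = [face for face, is_a in letter_cards
                 if must_turn(face, is_a, ascii_uppercase, range(10), g_rule)]

# Drinking-age version. "Beer", "Cola", and 25 are hypothetical stand-ins.
ALCOHOLIC = {"Vodka", "Beer"}
DRINKS = ["Vodka", "Beer", "Cola"]


def age_rule(drink, age):
    # False only when the drink is alcoholic and the age is under 21.
    return not (drink in ALCOHOLIC and age < 21)


drink_cards = [("Vodka", True), ("Cola", True), (17, False), (25, False)]
drink_answer = [face for face, is_a in drink_cards
                if must_turn(face, is_a, DRINKS, range(0, 120), age_rule)]

print(letter_answer)  # ['G', 5]
print(drink_answer)   # ['Vodka', 17]
```

The 2 and the 25 are never flagged: whatever is on the back, they cannot produce a true “if” paired with a false “then.”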

Conclusion

OK, so let’s say I’m your business stakeholder. I say to you, “I need ascending, even, purple, and red numbers, and lots of them, so get to building. I’m willing to pay.” What do you do? Do you focus on velocity and creating a “happy customer”?

Perhaps all the unnecessary waste and cost generated doesn’t matter to you. Perhaps it’s tied to a different cost center. Perhaps no one is even looking. If “happy customer” means “done”, and that’s what you’re measured on, then sure, you’ll aim for quickly creating “happy customer”.

But notice this isn’t the same thing as “maximizing value”—not in the macro it isn’t.

If instead we define “done” in terms of actually maximizing value, then to be “done” faster you would need to slow down. This reminds me of an old saying that Indi Young first brought to my attention. (See card below.) And yes, this might seem counterintuitive, but the bottom line here is that building faster than you can learn from it is waste.

If you learn nothing from what you’re building, then how do you know it’s not mostly waste? You don’t—and you probably won’t if your focus is just “happy customer”. (As readers of this blog know, this is a big problem with Agile.) Focusing on velocity rather than on discovery and quality thus creates a kind of debt. In addition to technical debt, we can call it “learning debt”.

Consider: you can’t think outside a box you don’t even see. If you don’t challenge assumptions, then you’re not “painting the walls” of the assumption box you’re in. If you don’t see the contours of your current assumption box, then you can’t survey the larger decision landscape. That means you’re accruing unnecessary learning debt (and likely paying compound interest).

The cost here is twofold. First, the work that is done will very likely not be as impactful as it could have been. (As Don Norman cautions, if you just solve the problem that’s asked of you, then you’re probably solving the wrong problem.) Second, even if the same discoveries are still made later, it will then be more expensive to change things and less likely the new information will be acted on at all.

Solving deep issues requires sufficiently diverse failure. Premature “happy customer” comes with an opportunity cost. Failure is how you map the dimensions of the assumption box you’re in. Not all failure, of course, is created equal. Sim Sitkin was the first to outline the concept of “intelligent failure.” When you’re working under uncertainty, learning should always be part of the objective.

Work should therefore be treated, at least in part, as probes, which means it must be right-sized to optimize learning. If a probe is right-sized and you do learn from it, then the planned action is a success regardless of whether the outcome was “successful”. Ignoring this is akin to publishing only “significant” results, a practice that has fed the current replication crisis in science.

Think about it this way. If uncertainty entails risk, and information is anything that reduces uncertainty, then information also helps to reduce risk. Where desired outcomes are highly predictable, you do not need to learn your way forward. Where desired outcomes are not highly predictable, building without optimizing for learning accrues learning debt and compounds risk.

The outcome, however, will typically not be predictable, and this cuts both ways.

First, predictable failures do not provide information about how things should be done differently.

Second, premature successes have an opportunity cost. By failing to “paint the walls”, you are still accruing learning debt.
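The claim that predictable outcomes carry no information has a precise form in Shannon’s information theory: the most a pass/fail probe can tell you, on average, is the entropy of its outcome. Here is a minimal Python sketch, assuming each probe is a simple two-outcome experiment with a subjective probability of success; the probabilities are illustrative, not measured.

```python
import math


def outcome_entropy(p_success: float) -> float:
    """Shannon entropy, in bits, of a probe with two outcomes (success/failure).
    This bounds how much a single yes/no result can tell you on average."""
    if p_success in (0.0, 1.0):
        return 0.0  # a certain outcome carries no information
    q = 1.0 - p_success
    return -(p_success * math.log2(p_success) + q * math.log2(q))


for p in (1.0, 0.95, 0.7, 0.5):
    print(f"P(success) = {p:.2f} -> at most {outcome_entropy(p):.2f} bits")
```

A probe you are certain about yields 0 bits and a coin-flip probe yields 1 bit; one you are 95% sure of yields less than 0.3 bits, which is why piling up near-certain confirmations teaches so little.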

Planning in the face of uncertainty should therefore treat work as right-sized probes. To borrow a phrase from Erika Hall, it should focus on producing just enough research to drive just-in-time decisions.

When intelligent probes fail, there is still positive ROI in the learnings provided. These failures must, however, be large enough to be noticed and felt in a way that motivates a response.

There must be ongoing work to update awareness of the assumptions at play. The learning that probes provide must be recognized as relevant to existing assumptions.

If not, then the walls remain unpainted.

The box remains invisible.
